- AI with Dino Gane-Palmer

⚡Last week, AI changed everything - 5 times

From agentic browsers to AI glasses, AI is rewriting how we work.

Hi, and happy Monday.

Last week there were 5 big shifts that made me sit up and change my own routines.

Think of these as the emerging “new normal”.

1. Comet: The agentic browser is now free for everyone

Perplexity's Comet - an agentic browser that “browses” for you - was previously behind a $200/month paywall. Now it’s free.

This shift is noteworthy because Comet is an agentic browser that actually works. And now you can experience the magic yourself.

For example, while I was working on this newsletter I remembered that I needed to spend some time on LinkedIn researching former CEOs of dental practices in the US. (Yes, very niche! Let me know if you know of anyone).

I just opened the Assistant sidebar in Comet and gave it this LinkedIn assignment. It worked through the task step by step and came back with a list of profiles in the sidebar.

The AI Assistant sidebar in Comet performing LinkedIn research for me.

This is a task that would normally require opening LinkedIn, doing various searches, opening several browser tabs, reviewing each one and eventually narrowing in on the ones of highest interest - something that would have taken at least an hour, if not two.

The real magic is the UI: I don’t need to watch it open browser windows or inspect its work. I continued working on this newsletter in the main window, while the side panel showed the work it was doing and then reported back when it was done.

Comet effortlessly performs tasks ranging from rescheduling meetings to drafting emails - without ever forcing me to switch tabs.

This is the future of work.

2. The next form factor: glasses as AI displays

It’s not just browsers that are becoming our assistants.

While Meta has been selling AI-enabled Ray-Ban sunglasses for a while, last week was the first time you could purchase a version with a display built into the glasses: in-lens screens that show messages, AI overlays, and translations - all right in your field of vision.

Meta’s Ray-Ban Display glasses. Source: Meta.

Why is this important?

Glasses are not encumbered by the legacy ways of working with computers: keyboards, fixed screens, and apps.

With glasses, AI is always present, seeing what you see and hearing what you hear, right in your field of vision. This means answers can appear instantly without breaking your focus or pulling your attention away from people and tasks.

Meta’s Ray-Bans are already popular. With an in-lens display added, they offer a glimpse of AI becoming an invisible, reliable partner that assists with everything we do, automatically.

3. AI that codes by itself for 30+ hours

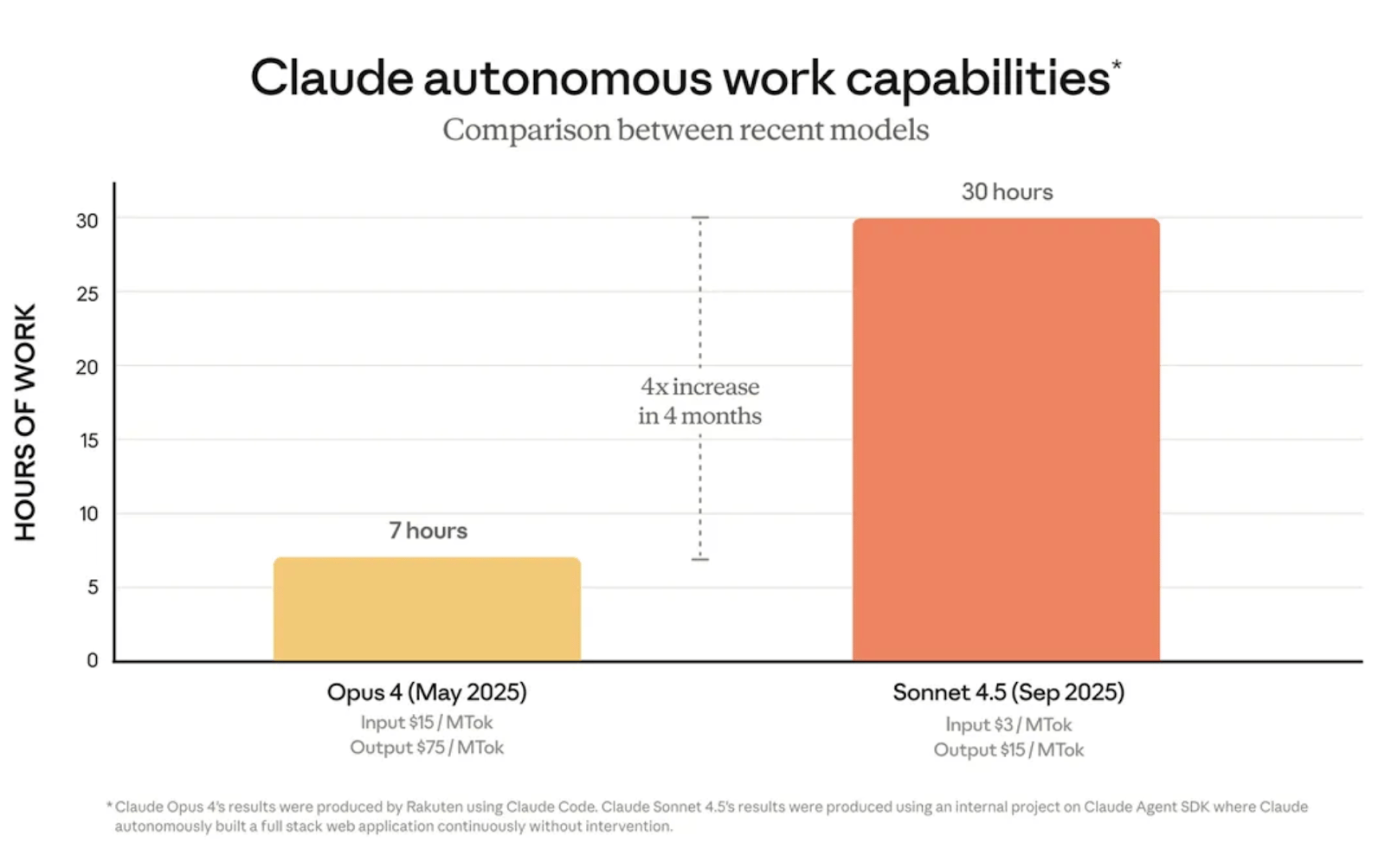

Anthropic dropped Claude Sonnet 4.5 - an AI model that can work autonomously on complex tasks (such as coding, debugging, and integration) for more than 30 hours straight.

This means you can delegate extended projects, migrations, or deep research tasks, and the AI can maintain focus and coherence without human intervention.

Source: Anthropic.

Why is this important?

It’s a step toward true “AI colleagues” capable of sustained, high-value autonomous work, not just quick replies or short scripts.

Enterprises can assign AI complex jobs that run for an entire workday or longer.

Think: assign a task Friday night, wake up Monday with a draft ready.

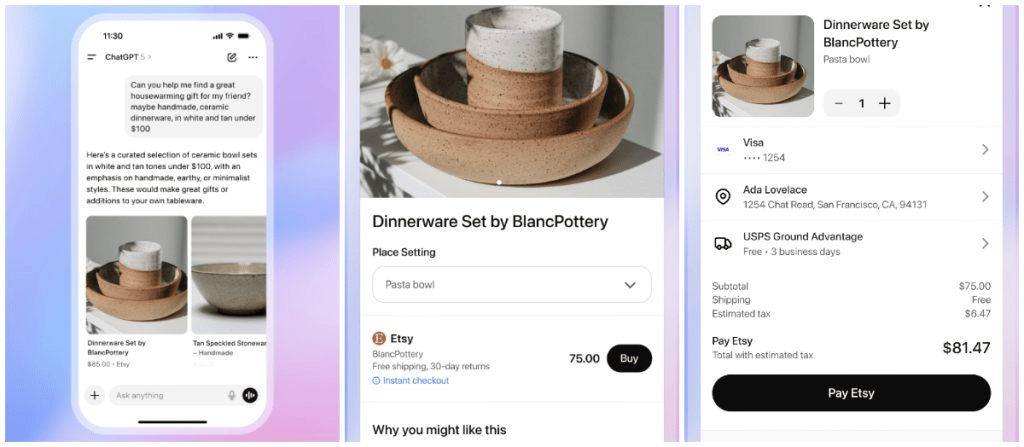

4. ChatGPT is now a storefront

ChatGPT’s new instant checkout feature. Source: OpenAI.

ChatGPT will now surface and sell products directly in conversation.

No need to leave the chat, and no need for multiple clicks.

The feature already works for Etsy listings. Over a million Shopify merchants will be added soon, with some large brands (such as Glossier, SKIMS, Spanx, and Vuori) named as upcoming partners.

With buying happening directly inside ChatGPT, traditional digital marketing, SEO, and e-commerce will face some displacement. The journey from product discovery to product purchase is collapsing.

Enterprises must rethink their digital sales channel strategies.

5. All content barriers are evaporating

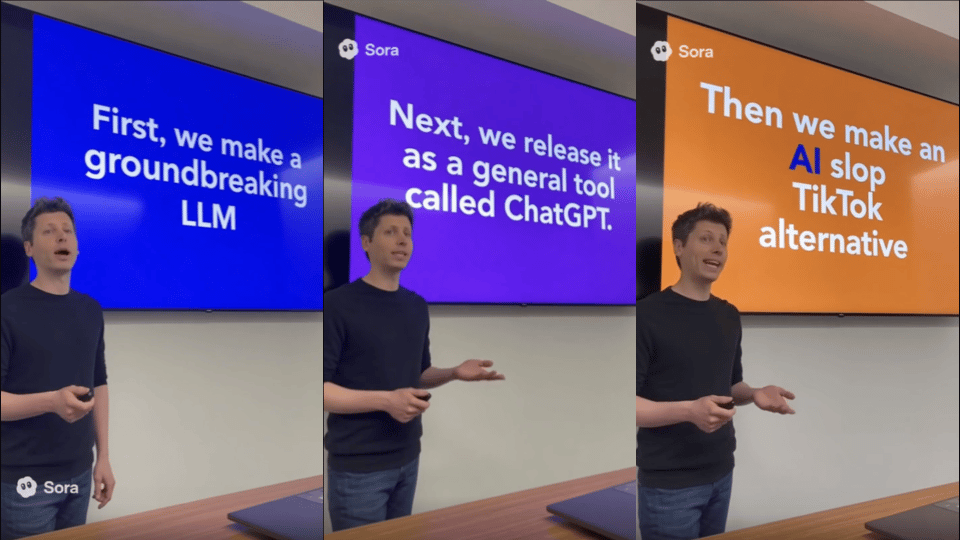

OpenAI’s newest video model, Sora 2, introduces a “Cameo” feature that turns you (or someone you know) into the star of AI-generated clips.

Stills from a Sora video by @t3dotgg featuring OpenAI CEO Sam Altman.

You can insert your own likeness and voice into an AI video and post it on a TikTok-style Sora social network.

If you thought writing got democratized with GPT, now video follows. You no longer need cameras, crew, or editing rigs. The barrier to entry lowers - and the creative runway widens.

Watch The Quack, a 2-minute video story posted by OpenAI, and be glad you’re not working in Hollywood.

Weeks like this can make anyone’s head spin.

But the core technology behind video generation, 30-hour coding assignments, and automated web browsing is still autocomplete, scaled to ever greater levels.

The shortcomings of the current generation of AI include:

- It does not think for itself or pursue self-directed goals.
- It produces outputs by extrapolating from the data it was trained on, mixed with some details you provide in the prompt.
- It does not learn by distilling past experience into frameworks and reapplying those lessons to future tasks.

The technology will continue to improve in its ability to generate all kinds of digital outputs - from code to video.

Yet, it still needs human input.

Without the right context, it will produce “slop”: generic outputs with little value.

If you want to go deeper, join my complimentary AI Masterclass - where I show teams how to actually get ROI from AI.

Here’s the invite form: https://docs.google.com/forms/d/e/1FAIpQLSf8ZbGGY1ZdaiUxxCOTTS3CIFmkO9f-oSzU0cfYLjO_cQn7dw/viewform

Talk soon,

Dino

P.S. If you feel like AI is eating up all kinds of work, and that yours might be next… don’t worry. We’ll be tackling the future of jobs next.